AI News Today: Breakthroughs, Skepticism, and What We Know

AI's "Garbage In, Garbage Out" Problem: Why Human Skepticism Is the Key to a Better Future

The AI Awakening: Skepticism as a Catalyst

We're at a fascinating crossroads, folks. The AI revolution is barreling forward, promising to reshape everything we know. But whispers of doubt are growing louder, and I think that's fantastic. Why? Because skepticism, especially from the very people building these systems, is exactly what we need to refine AI and ensure it truly benefits humanity.

Think about it. A dozen AI raters are now cautioning friends and family about the pitfalls of generative AI. They've seen the sausage being made, so to speak, witnessing the inaccuracies and flaws firsthand. We're talking about the people training these models – the ones feeding the beast! When they start raising red flags, we need to listen.

One AI rater, Krista Pawloski, who works on Amazon Mechanical Turk, recounts almost misclassifying a tweet containing a racial slur. Scary stuff, right? It's a stark reminder that even the most sophisticated algorithms inherit the errors and biases of the people who label their training data. And Brook Hansen, a data worker since 2010 who has helped train some of Silicon Valley's biggest AI models, is now presenting on the ethical and environmental impacts of AI. This isn't just a tech problem; it's a societal one.

This reminds me of the early days of the printing press. Suddenly, information was democratized, but so was misinformation. People questioned the new technology, worried about its impact on society. That initial skepticism, that critical eye, ultimately led to the development of journalistic standards, fact-checking, and a more informed populace. Isn’t it possible that AI is at a similar juncture?

The NewsGuard audit paints a concerning picture. While non-response rates from AI models have dropped to zero, the likelihood of repeating false information has almost doubled in just one year. That's not progress; that's regression. The models now answer every question, and they are wrong more often. It's as if AI is becoming more confident in its lies.

And here's where it gets really interesting: This distrust isn't just anecdotal. It's reflected in consumer behavior. A Morning Consult report shows a widening gap in AI adoption based on income. High-income earners are diving headfirst into AI tools like Gemini and ChatGPT, while lower-income households are sticking with value-oriented brands. It's almost as if access to, and trust in, AI is becoming a luxury good. Is this the kind of digital divide we want to create?

The Human Element: Ethics and the Future of AI

But here's the silver lining. This skepticism, this growing awareness of AI's limitations, is forcing us to confront the ethical and environmental implications head-on. Brook Hansen and Krista Pawloski's presentation at the Michigan Association of School Boards is a perfect example. They're not just talking about the cool features; they're talking about the real-world consequences.

One Google AI rater even expressed concern about the company's approach to AI-generated responses to health questions, citing a lack of medical training among raters. I mean, come on. We're talking about people's health here! This isn't some theoretical exercise; it's life and death.

Another Google AI rater with a history degree reported that Google's AI refused to answer questions about the history of the Palestinian people but readily provided information about the history of Israel. When I first heard this, I honestly just sat back in my chair, speechless. This is the kind of bias that can perpetuate harmful stereotypes and historical inaccuracies.

And let's not forget the "garbage in, garbage out" principle. If we're feeding AI models biased or inaccurate data, we're only going to get biased or inaccurate results. It's like teaching a child with a flawed textbook – they're going to learn the wrong lessons.

But here's the exciting part: We have the power to change this. We can demand transparency, accountability, and ethical considerations from AI developers. We can support initiatives that promote responsible AI development. We can educate ourselves and others about the potential risks and benefits of AI.

In fact, I saw a great comment on Reddit the other day that really resonated with me. Someone wrote, "AI is a tool, and like any tool, it can be used for good or for evil. It's up to us to make sure it's used for good." Isn’t that the truth?

And, hey, I understand the initial defensiveness and frustration that some attendees at the Michigan Association of School Boards conference felt. Change is scary. But ignoring the problem won't make it go away. We need to embrace the challenge and work together to build a better future.

Human Input: The Only Way Forward

So, what does all of this mean? It means that the future of AI isn't just about algorithms and data; it's about people. It's about our values, our ethics, and our collective responsibility to shape this technology in a way that benefits all of humanity. The initial skepticism is not a roadblock; it's a vital ingredient for progress. Let's listen to the voices of caution, learn from our mistakes, and build an AI future we can all be proud of.

Previous Post:Navy Federal EFTA Settlement: The Breakdown for Members

Next Post:Kroger: what's really going on

Related Articles

Dubai: Influencer Death and What We Know

Generated Title: The Dichotomy of Fame: When Social Media Success Collides with Real-World Consequen...

The HVAC Racket: Repair vs. Replacement and How to Pick a Company That Won't Screw You Over

So, let me get this straight. The Los Angeles Angels, a professional baseball franchise supposedly w...

Artificial General Intelligence: What It Is and Why It's Our Next Great Leap

The AGI Goalpost Has Moved. And That’s the Best Thing That Could Have Happened. There’s a strange ne...

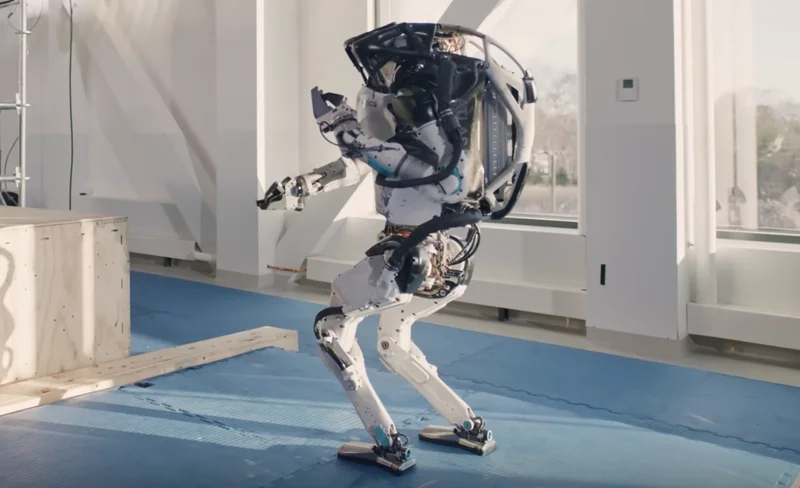

Robot Ambitions: Solid-State Batteries and AI Chips

Alright, let's talk about Xpeng and their sudden robot obsession. The Guangzhou-based EV maker says...

Anduril's AI Jet 'Fury' Takes Flight: Why This Changes Everything for Aerial Warfare

I have to be honest with you. When I first saw the news that Anduril's unmanned jet "Fury" makes fir...

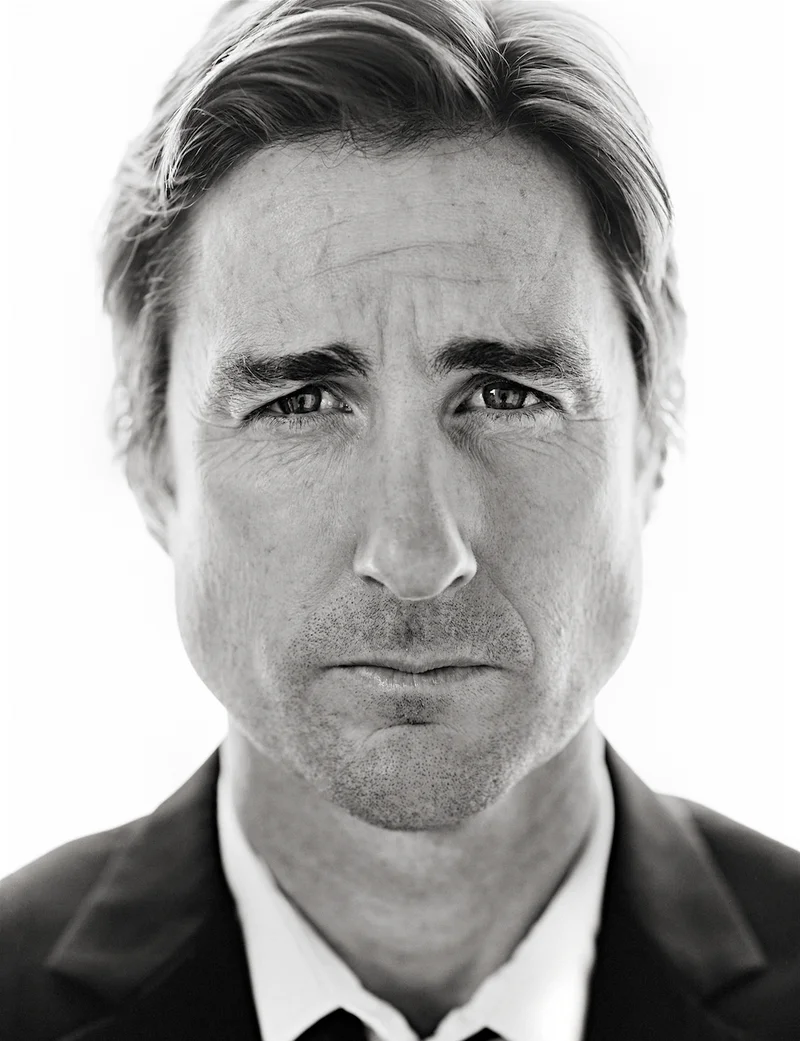

AT&T's Luke Wilson Campaign: A Masterclass in Nostalgia and Brand Storytelling

I was scrolling through my feeds the other night, half-watching a stream, when a familiar face poppe...